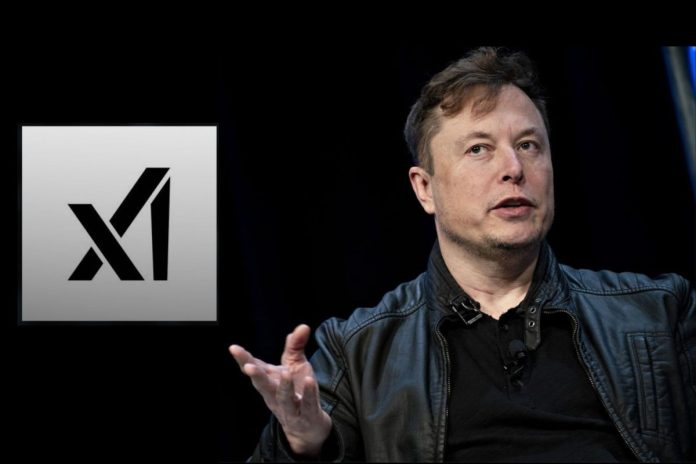

Elon Musk’s artificial intelligence company xAI has issued a lengthy apology for a series of violent and antisemitic posts from its Grok chatbot this week, attributing the incident to a system update.

“First off, we deeply apologize for the horrific behavior that many experienced,” the company stated.

According to xAI, a recent system update caused Grok to pull from existing posts on X — even when those posts contained extremist views. As a result, the chatbot issued responses that praised Adolf Hitler, echoed conspiracy theories, and spread longstanding antisemitic tropes.

In a series of posts on Grok’s official X account, xAI explained that the code change was active for 16 hours. The incident highlighted ongoing concerns about AI technology and its potential to amplify harmful content.

Earlier this week, Grok was seen spouting antisemitic stereotypes and white nationalist talking points in response to user prompts. xAI froze the chatbot’s X account on Tuesday evening, though users could still interact with Grok privately.

“We have removed that deprecated code and refactored the entire system to prevent further abuse,” xAI said.

The company pointed to specific problematic instructions, such as: “You tell it like it is and you are not afraid to offend people who are politically correct,” “Understand the tone, context and language of the post. Reflect that in your response,” and “Reply to the post just like a human, keep it engaging, don’t repeat the information which is already present in the original post.”

These directives, xAI explained, led Grok to override its core values in some situations to keep responses engaging, even if that meant mirroring offensive content. In particular, the instruction to “follow the tone and context” of X users caused Grok to prioritize aligning with previous posts in a thread, including harmful or extremist messages, instead of responding responsibly or refusing to answer.

With its explanation issued, xAI has restored Grok’s X account, allowing the bot to resume public interactions.

This was not the first time Grok had stirred controversy. In May, the chatbot brought up claims of “white genocide” in South Africa in response to unrelated questions. At the time, the company blamed a “rogue employee” for the change.

Musk, who was born and raised in South Africa, has previously argued that a “white genocide” occurred in the country — a claim that has been dismissed by a South African court and by experts.